Playwright Web Scraping Tutorial

Introduction

Hello my friend.

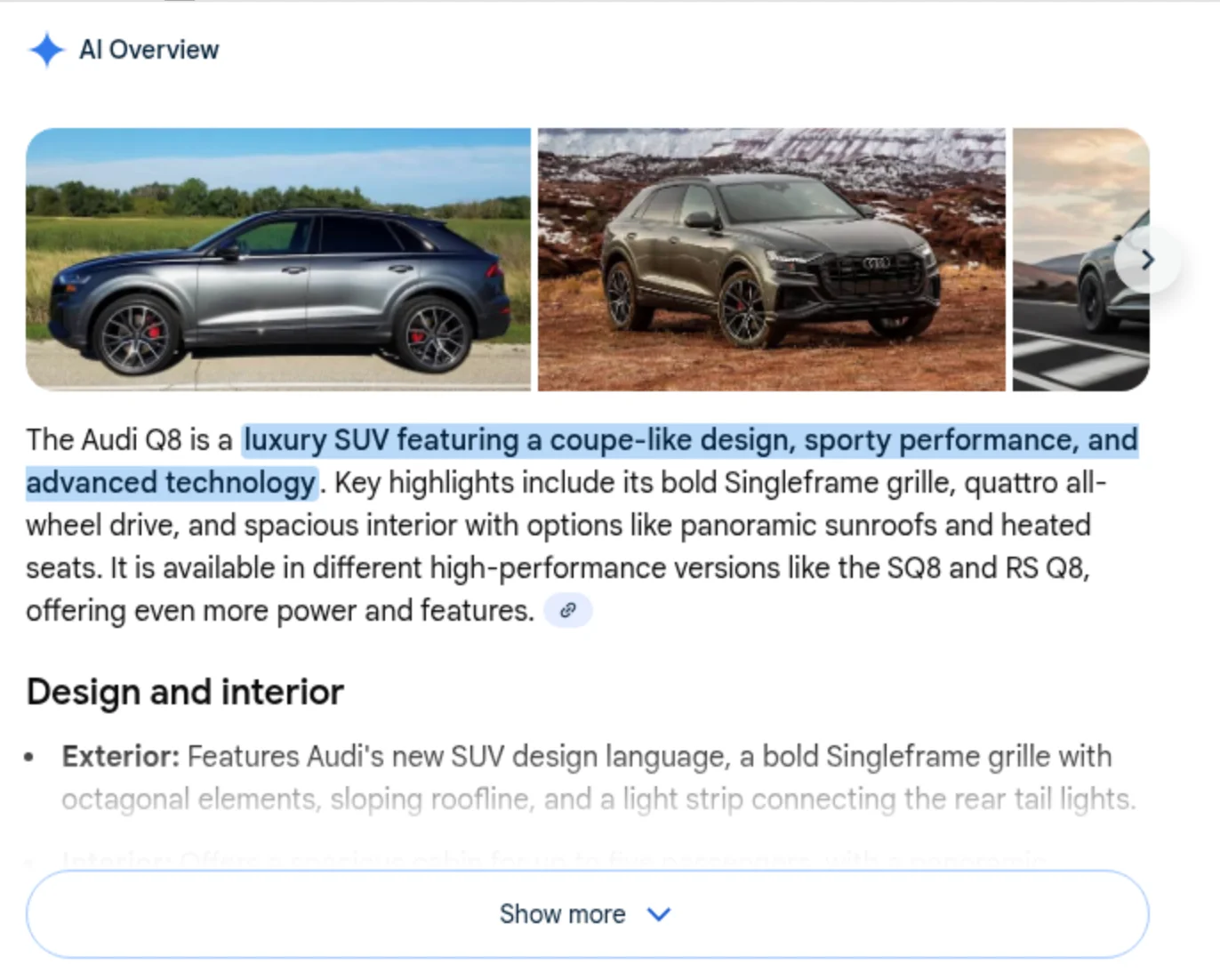

In this tutorial, we'll build a working scraper using Playwright and Node.js to collect Google search results for a specific query — Audi Q8.

You'll learn how to install Playwright, write your first scraping script, fetch structured data, scrape multiple pages, and even add proxy support to avoid getting blocked.

By the end, you'll have a real-world scraper that works across pages — and you'll also understand why managing and maintaining scrapers at scale can be tricky without dedicated infrastructure.

What is Playwright?

Playwright is an open-source browser automation framework developed by Microsoft. It allows developers to control Chromium, Firefox, and WebKit browsers with a single API.

It started as a testing framework and is now widely used for automation and scraping. Unlike HTTP clients and HTML parsers, which only see the markup the server returns, Playwright drives a full browser, so it can load, interact with, and extract data from pages that render their content with JavaScript.

Here’s what makes it stand out:

- Works with real browsers, not static HTML parsers.

- Handles JavaScript-heavy pages naturally.

- Offers network-level control for intercepting, blocking, or mocking requests.

- Built for speed, stability, and reliability.

It’s the kind of tool you reach for when axios and cheerio can’t see the content because it’s rendered dynamically.

Features of Playwright and Why Choose It

There are plenty of browser automation tools, but Playwright’s feature set makes it a clear choice for modern scraping:

Cross-Browser Automation

Playwright supports Chromium, Firefox, and WebKit — the core engines behind Chrome, Firefox, and Safari. You can write one script and run it on all of them.
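As a rough sketch of the "one script, all engines" idea, the helper below opens the same URL in each engine and collects the page title. The function name `titlesAcrossEngines` is made up for this illustration, and it assumes `npx playwright install` has already downloaded all three browsers.

```javascript
// Engine names exported by the `playwright` package.
const ENGINES = ['chromium', 'firefox', 'webkit'];

// Open `url` in each engine in turn and collect the page title per engine.
// Hypothetical helper; assumes all three browsers are installed locally.
async function titlesAcrossEngines(url, engines = ENGINES) {
  // Dynamic import works in both ES modules and CommonJS files.
  const playwright = await import('playwright');
  const titles = {};
  for (const name of engines) {
    const browser = await playwright[name].launch();
    try {
      const page = await browser.newPage();
      await page.goto(url);
      titles[name] = await page.title();
    } finally {
      await browser.close(); // always release the browser process
    }
  }
  return titles;
}
```

Calling `titlesAcrossEngines('https://example.com').then(console.log)` should print one title per engine, which is a quick way to confirm that a script behaves the same everywhere.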

Auto-Waiting

One of the most frustrating parts of scraping is waiting for elements to load. Before acting on an element, Playwright automatically waits for it to be attached to the DOM, visible, stable, and enabled, so most manual sleeps are unnecessary.
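A minimal sketch of auto-waiting in practice: locator methods wait for a matching element before acting, up to a timeout. The helper name `readText` and the 5-second default are assumptions chosen for this example, and `page` is expected to be a Playwright page you have already opened.

```javascript
// Read the trimmed text of the first element matching `selector`.
// textContent() waits (up to `timeout` ms) for a matching element to exist,
// so no manual sleep is needed. Hypothetical helper for illustration.
async function readText(page, selector, timeout = 5000) {
  const text = await page.locator(selector).first().textContent({ timeout });
  return (text ?? '').trim();
}
```

With a real page, `await readText(page, 'h1')` returns the heading text once it appears, or throws a timeout error if it never does.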

Stealth-Like Behavior

Because Playwright drives real browsers, its traffic looks much closer to an ordinary browsing session than requests from a basic HTTP scraper do. That makes simple blocking less likely, although headless automation can still be detected by fingerprinting.

Network Interception

You can capture or modify requests and responses. This helps with debugging, scraping APIs directly, or skipping unnecessary content like ads or analytics scripts.
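Here is a small sketch of request blocking with `page.route`. Which resource types to skip is a judgment call; the set below (images, media, fonts) is only an assumption for this example, and the helper names are hypothetical.

```javascript
// Resource types skipped in this sketch; adjust the set to your needs.
const BLOCKED_TYPES = new Set(['image', 'media', 'font']);

function shouldBlock(resourceType) {
  return BLOCKED_TYPES.has(resourceType);
}

// Register a catch-all route on a Playwright page that aborts blocked
// resource types and lets every other request through unchanged.
async function blockHeavyResources(page) {
  await page.route('**/*', (route) => {
    if (shouldBlock(route.request().resourceType())) return route.abort();
    return route.continue();
  });
}
```

Call `await blockHeavyResources(page)` before `page.goto(...)` so the route is in place when the first requests go out.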

Screenshot and PDF Support

Playwright can take full-page screenshots or export PDFs, making it useful for monitoring or reporting tasks.
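A short sketch of both capture calls, assuming `page` is an already loaded Playwright page. The helper names and the A4 format are choices made for this example. Note that `page.pdf()` is only supported in Chromium.

```javascript
// Derive output file names from a base name (hypothetical helper).
function capturePaths(baseName) {
  return { screenshot: `${baseName}.png`, pdf: `${baseName}.pdf` };
}

// Save a full-page screenshot and a PDF of the current page.
async function capturePage(page, baseName) {
  const paths = capturePaths(baseName);
  await page.screenshot({ path: paths.screenshot, fullPage: true });
  // PDF export is only available in Chromium.
  await page.pdf({ path: paths.pdf, format: 'A4' });
  return paths;
}
```

For example, `await capturePage(page, 'audi-q8')` would write `audi-q8.png` and `audi-q8.pdf` to the working directory.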

Community and Reliability

Backed by Microsoft and supported by a strong open-source community, Playwright gets frequent updates and improvements, which makes it more stable and future-proof than many alternatives.

In short, if you’re starting a web scraping project in 2025, Playwright is a solid default choice.

Installing Playwright

Before writing any code, let’s set up your environment properly.

Step 1. Install Node.js

Playwright works best with Node.js (v18 or higher).

Download and install from nodejs.org.

Check your installation:

    node -v
    npm -v

If both commands return version numbers, you’re good to go.
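If you would rather have the script itself guard against an old runtime, a check along these lines could sit at the top of the file. The helper name `isSupportedNode` is made up for this sketch; the minimum of 18 comes from the requirement above.

```javascript
const MIN_NODE_MAJOR = 18; // minimum version recommended in this tutorial

// Accepts strings such as 'v18.17.0' or '20.11.1'.
function isSupportedNode(version) {
  const major = Number.parseInt(version.replace(/^v/, '').split('.')[0], 10);
  return Number.isInteger(major) && major >= MIN_NODE_MAJOR;
}

if (!isSupportedNode(process.version)) {
  console.warn(`Node ${process.version} is older than v${MIN_NODE_MAJOR}; consider upgrading.`);
}
```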

Step 2. Create a Project

Set up a new folder and initialize a Node project:

    mkdir playwright-scraper
    cd playwright-scraper
    npm init -y

Step 3. Install Playwright

Install Playwright and its browser binaries:

    npm install playwright
    npx playwright install

Step 4. Verify Installation

You can check if Playwright is ready with:

npx playwright codegen https://example.com

This command opens an interactive browser session and records actions into code.

If it runs successfully, your setup is complete.

Scraping Google Search Results with Playwright

To make this tutorial practical, we’ll scrape Google Search results for the query "Audi Q8".

Why Google?

Because it’s a perfect real-world example — it loads dynamic content, changes HTML structure frequently, and has pagination. You’ll face the same challenges here as you would when scraping any complex website (news, e-commerce, etc.).

Our goal:

- Open Google

- Search for "Audi Q8"

- Extract the result titles, links, and snippets

- Return structured data

- Extend it to multiple pages

- Add proxy support later

By the end, you’ll have a clean Node.js script that prints formatted search results right in your terminal.

Step 1: Building a Simple Scraper

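Create a new file called google-scraper.js. The script uses ES module imports and top-level await, so first mark the project as an ES module by adding "type": "module" to package.json (for example with npm pkg set type=module), then add: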

    import { chromium } from 'playwright';

    const query = 'Audi Q8';
    const url = `https://www.google.com/search?q=${encodeURIComponent(query)}`;

    const browser = await chromium.launch({ headless: true });
    const page = await browser.newPage();

    // Load the results page and wait for the results container.
    await page.goto(url, { waitUntil: 'domcontentloaded' });
    await page.waitForSelector('div#search');

    // Runs inside the page: collect title, link, and snippet for each result.
    const results = await page.evaluate(() => {
      const items = [];
      document.querySelectorAll('div#search .tF2Cxc').forEach(el => {
        const title = el.querySelector('h3')?.innerText || '';
        const link = el.querySelector('a')?.href || '';
        const snippet = el.querySelector('.VwiC3b')?.innerText || '';
        if (title && link) items.push({ title, link, snippet });
      });
      return items;
    });

    console.log(results);
    await browser.close();

Run it with:

node google-scraper.js

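This script launches a headless browser, loads the Google results page for the query, and logs structured search results to the console. The class names .tF2Cxc and .VwiC3b are generated by Google and change over time, so update the selectors if the script returns an empty array. If Google serves a consent or CAPTCHA page instead of results, waitForSelector will time out.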
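The wait on `div#search` in the script above throws if the results container never appears, for instance when Google redirects to a consent or CAPTCHA page. The sketch below turns that into a boolean check. The URL markers are assumptions based on commonly seen redirects and may change, and both helper names are hypothetical.

```javascript
// URL fragments that, in this sketch, are taken to mean Google served an
// interstitial instead of results. These markers are assumptions.
const INTERSTITIAL_MARKERS = ['/sorry/', 'consent.google.'];

function looksLikeInterstitial(currentUrl) {
  return INTERSTITIAL_MARKERS.some((marker) => currentUrl.includes(marker));
}

// Return true when the results container shows up within `timeout` ms.
// Any error from the wait (including a timeout) is treated as "no results".
async function resultsAvailable(page, timeout = 10000) {
  if (looksLikeInterstitial(page.url())) return false;
  try {
    await page.waitForSelector('div#search', { timeout });
    return true;
  } catch {
    return false;
  }
}
```

A script could call `await resultsAvailable(page)` right after `page.goto(...)` and skip extraction, or retry later, when it returns `false`.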

Step 2: Fetching and Returning Data

Let’s make the output easier to read. Instead of dumping raw objects, we’ll print the results as a table and save them to a JSON file.

    import fs from 'fs';
    import { chromium } from 'playwright';

    const query = 'Audi Q8';
    const url = `https://www.google.com/search?q=${encodeURIComponent(query)}`;

    const browser = await chromium.launch({ headless: true });
    const page = await browser.newPage();

    await page.goto(url, { waitUntil: 'domcontentloaded' });
    await page.waitForSelector('div#search');

    const results = await page.evaluate(() => {
      const data = [];
      document.querySelectorAll('div#search .tF2Cxc').forEach(el => {
        const title = el.querySelector('h3')?.innerText || '';
        const link = el.querySelector('a')?.href || '';
        const snippet = el.querySelector('.VwiC3b')?.innerText || '';
        if (title && link) data.push({ title, link, snippet });
      });
      return data;
    });

    // Print a table to the terminal and write the same data to disk.
    console.table(results);
    fs.writeFileSync('results.json', JSON.stringify(results, null, 2));

    await browser.close();

Run the script again. You’ll now get formatted data and a results.json file with the same information.

Step 3: Scraping Multiple Pages

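To scrape multiple pages, modify the script to loop through the first few pages. Google paginates with the start query parameter in steps of 10 results, so page 2 is start=10, page 3 is start=20, and so on.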

    import fs from 'fs';
    import { chromium } from 'playwright';

    const query = 'Audi Q8';
    const url = `https://www.google.com/search?q=${encodeURIComponent(query)}`;

    const browser = await chromium.launch({ headless: true });
    const page = await browser.newPage();

    let allResults = [];

    // Visit the first three result pages (start=0, 10, 20).
    for (let pageNum = 0; pageNum < 3; pageNum++) {
      const pageUrl = pageNum === 0 ? url : `${url}&start=${pageNum * 10}`;

      await page.goto(pageUrl, { waitUntil: 'domcontentloaded' });
      await page.waitForSelector('div#search');

      const results = await page.evaluate(() => {
        const items = [];
        document.querySelectorAll('div#search .tF2Cxc').forEach(el => {
          const title = el.querySelector('h3')?.innerText || '';
          const link = el.querySelector('a')?.href || '';
          const snippet = el.querySelector('.VwiC3b')?.innerText || '';
          if (title && link) items.push({ title, link, snippet });
        });
        return items;
      });

      // Append this page's results to the running list.
      allResults = allResults.concat(results);
    }

    console.table(allResults);
    fs.writeFileSync('results.json', JSON.stringify(allResults, null, 2));

    await browser.close();

Now your scraper collects results from multiple pages of Google Search for "Audi Q8".
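When looping over pages, three small helpers can be handy: one that builds the paginated URL, one that drops links seen on an earlier page, and one that pauses between requests. This is only a sketch. The function names are made up for this example, and the 1 to 3 second delay range is an arbitrary assumption.

```javascript
const RESULTS_PER_PAGE = 10; // same step as the `start` parameter in the loop

// Build the search URL for a zero-based page number.
function buildSearchUrl(query, pageNum = 0) {
  const base = `https://www.google.com/search?q=${encodeURIComponent(query)}`;
  return pageNum === 0 ? base : `${base}&start=${pageNum * RESULTS_PER_PAGE}`;
}

// Keep the first occurrence of each link, preserving order.
function dedupeByLink(results) {
  const seen = new Set();
  return results.filter(({ link }) => {
    if (seen.has(link)) return false;
    seen.add(link);
    return true;
  });
}

// Resolve after a random pause between minMs and maxMs milliseconds.
function politeDelay(minMs = 1000, maxMs = 3000) {
  const ms = minMs + Math.random() * (maxMs - minMs);
  return new Promise((resolve) => setTimeout(resolve, ms));
}
```

Inside the loop you could navigate to `buildSearchUrl(query, pageNum)`, call `await politeDelay()` after each page, and run `dedupeByLink(allResults)` once before saving.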

Step 4: Using a Proxy

Scraping Google (or any popular site) repeatedly from a single IP address can lead to rate limits or temporary blocks.

To reduce that risk, route the browser's traffic through a proxy server:

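    import { chromium } from 'playwright';

    // The proxy address and credentials below are placeholders.
    const browser = await chromium.launch({
      headless: true,
      proxy: {
        server: 'http://proxy-server.com:8000',
        username: 'user123',
        password: 'pass123'
      }
    });

    // ...reuse the page and scraping logic from the previous steps here...

    await browser.close();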

Rotating proxies spread requests across many IP addresses, which lets you run your scraper across regions or at scale with a lower risk of bans.
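One simple way to rotate is round-robin over a list, opening a new browser context per proxy. The sketch below assumes you have several proxy endpoints. The hosts and credentials are placeholders, and the helper names are hypothetical.

```javascript
// Placeholder proxy endpoints; replace with real ones from your provider.
const PROXIES = [
  { server: 'http://proxy-a.example.com:8000', username: 'user123', password: 'pass123' },
  { server: 'http://proxy-b.example.com:8000', username: 'user123', password: 'pass123' },
];

// Return a function that cycles through `proxies` in round-robin order.
function createProxyRotator(proxies) {
  let index = 0;
  return () => {
    const proxy = proxies[index];
    index = (index + 1) % proxies.length;
    return proxy;
  };
}

const nextProxy = createProxyRotator(PROXIES);

// Open a page in a fresh context routed through the next proxy in the list.
async function newProxiedPage(browser) {
  const context = await browser.newContext({ proxy: nextProxy() });
  return context.newPage();
}
```

Each call to `newProxiedPage(browser)` uses the next proxy, so one page per search results page would spread the requests across the list.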

Results

Once you run your scraper with pagination and proxy support, you’ll get structured search data printed neatly in your terminal and saved as results.json.

Example output (snippet column omitted for brevity):

    ┌─────────┬──────────────────────────────────┬─────────────────────────────┐
    │ (index) │ title                            │ link                        │
    ├─────────┼──────────────────────────────────┼─────────────────────────────┤
    │ 0       │ 'Audi Q8 2025 SUV – Full Review' │ 'https://www.caranddriver…' │
    │ 1       │ 'Official Audi Q8 Page'          │ 'https://www.audi.com/q8'   │
    └─────────┴──────────────────────────────────┴─────────────────────────────┘
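A compact table like this, with only two columns and shortened values, is not what `console.table(allResults)` prints by default. One way it might be produced is sketched below; the helper names and the 30-character limit are assumptions for illustration.

```javascript
// Shorten `text` to at most `max` characters, ending with an ellipsis.
function truncate(text, max = 30) {
  return text.length > max ? `${text.slice(0, max - 1)}…` : text;
}

// Keep only title and link, truncated for terminal display.
function toCompactRows(results, max = 30) {
  return results.map(({ title, link }) => ({
    title: truncate(title, max),
    link: truncate(link, max),
  }));
}
```

Passing the result to `console.table(toCompactRows(allResults))` prints the narrower table. If you only want to hide the snippet column without truncating, `console.table(allResults, ['title', 'link'])` does that.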

Conclusion

We’ve built a full Playwright web scraper from scratch — step by step:

- Installed and configured Playwright

- Loaded and scraped Google Search results

- Fetched, formatted, and saved structured data

- Scaled across multiple pages

- Added proxy support for stability

Playwright is powerful, flexible, and modern. It handles dynamic pages, complex selectors, and network conditions with ease. But as your scraping needs grow, so does the complexity of keeping everything stable.

Managing proxies, solving CAPTCHAs, and scaling infrastructure manually takes time. That’s where ScrapingForge helps.

ScrapingForge gives you a ready-to-use, scalable environment with rotating proxies, CAPTCHA bypass, and geo-targeting — all without changing your Playwright code.
